Highly anticipated: Nvidia has finally unveiled the new GeForce 50 series, along with DLSS 4 technology. Four high-end GPUs were announced, and it won’t be long until you can get your hands on one. The RTX 5080 and RTX 5090 are set to launch first on January 30, followed by the RTX 5070 and RTX 5070 Ti in February. Now, it’s time to share our thoughts on what was revealed during the CES 2025 keynote – including all the specs, pricing, and performance claims.

The new GPUs

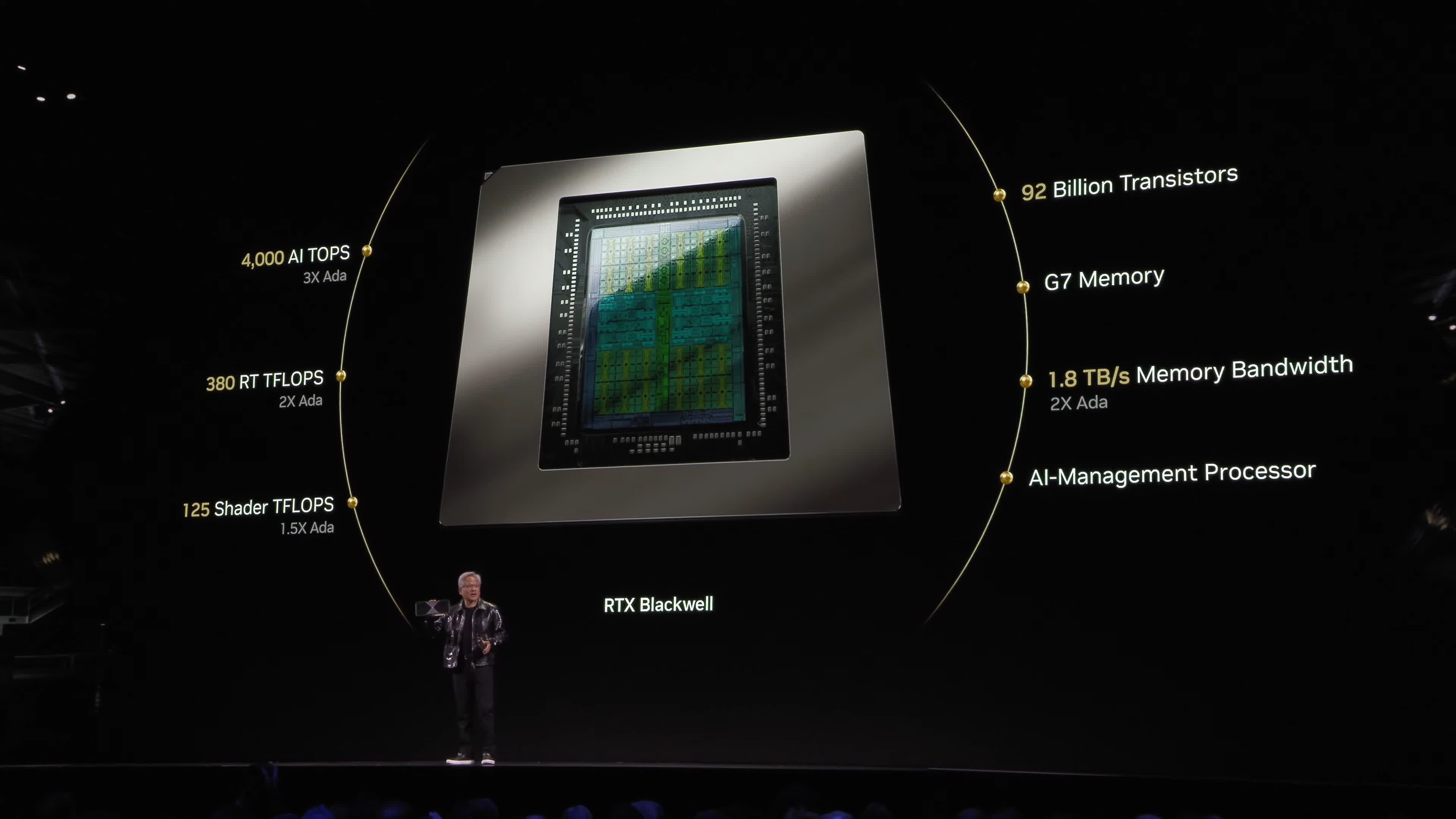

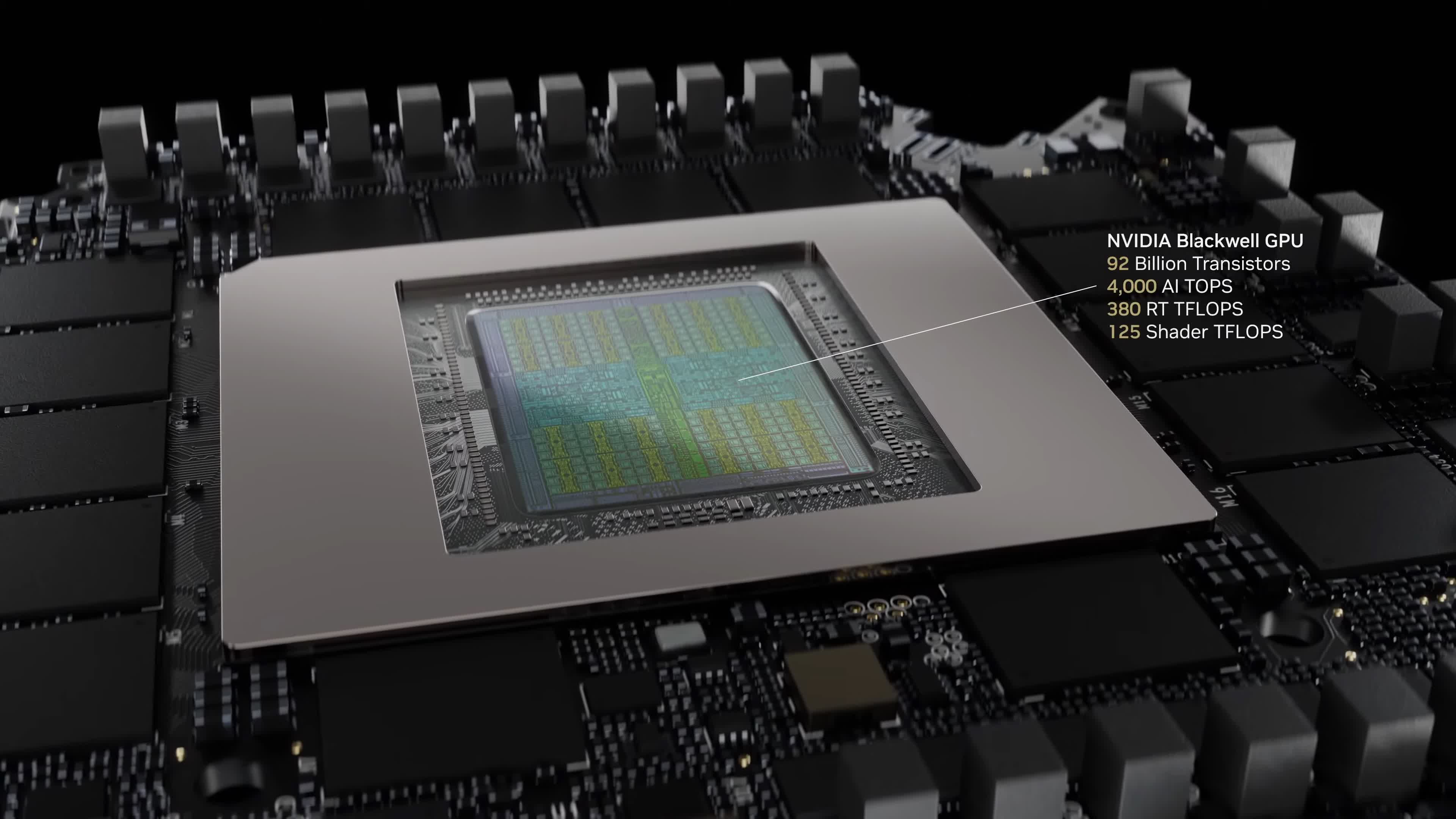

The new RTX 5090 flagship packs significantly more hardware than its predecessor. Not only does it use Nvidia’s new Blackwell architecture, it also has more CUDA cores, greater memory bandwidth, and a higher VRAM capacity. The SM count has increased from 128 on the RTX 4090 to a whopping 170 on the RTX 5090, a 33% increase in core count. However, GPU boost clocks have decreased slightly, from 2.5 GHz to 2.4 GHz.

The memory subsystem has also been overhauled, now featuring GDDR7 on a massive 512-bit bus. With this GDDR7 memory clocked at 28 Gbps, bandwidth reaches 1,792 GB/s, a nearly 80% increase over the RTX 4090. The card also includes 32GB of VRAM, the most Nvidia has ever provided on a consumer GPU.
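
These bandwidth figures follow from the standard peak-bandwidth arithmetic: bus width in bits times the per-pin data rate, divided by eight to convert bits to bytes. The sketch below is just a quick check of that math; the RTX 4090’s 384-bit bus and 21 Gbps GDDR6X are taken from Nvidia’s published spec for that card rather than from this article.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Every pin on the bus transfers data_rate_gbps gigabits per second,
    and dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * data_rate_gbps / 8


rtx_5090 = memory_bandwidth_gbs(512, 28)  # 512-bit GDDR7 at 28 Gbps
rtx_4090 = memory_bandwidth_gbs(384, 21)  # 384-bit GDDR6X at 21 Gbps (RTX 4090 spec sheet)

print(f"RTX 5090: {rtx_5090:.0f} GB/s")            # RTX 5090: 1792 GB/s
print(f"RTX 4090: {rtx_4090:.0f} GB/s")            # RTX 4090: 1008 GB/s
print(f"Increase: {rtx_5090 / rtx_4090 - 1:.0%}")  # Increase: 78%
```

The same formula gives the 960 GB/s (256-bit at 30 Gbps) and 896 GB/s (256-bit at 28 Gbps) figures quoted for the RTX 5080 and RTX 5070 Ti.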

Because Blackwell is still built on TSMC’s 4nm process, the board power rating has had to rise to feed these extra cores and memory, reaching a mammoth 575W, 28% higher than the RTX 4090.

The RTX 5080 uses a much smaller GPU configuration, based on an entirely different GPU die, with just 84 SMs – four more than the RTX 4080 Super from the last generation.

Almost everything about this model is half of the RTX 5090: just under half the CUDA cores, half the memory bus width at 256-bit, half the VRAM capacity at 16GB, and a little over half the memory bandwidth at 960 GB/s through the use of GDDR7 clocked at 30 Gbps. Historically, this configuration would align with an RTX 5070-tier GPU, so positioning it as a 5080 feels like a disappointing move for gamers.

The RTX 5070 Ti is cut down further, with 70 SMs, four more than the previous generation’s RTX 4070 Ti Super. However, boost clocks have dropped from 2.61 GHz to 2.45 GHz. It keeps the RTX 5080’s 256-bit memory bus, so it also has 16GB of GDDR7 memory, this time clocked at 28 Gbps for 896 GB/s of bandwidth. The 5070 Ti is rated at 300W, while the RTX 5080 is rated at 360W.

Then there’s the RTX 5070. This model features 48 SMs, just two more than the RTX 4070 from the previous generation. It includes a 192-bit memory bus and 12GB of VRAM, which feels insufficient for this tier of product. Boost clocks are only slightly higher than the RTX 4070, increasing from 2.48 GHz to 2.51 GHz, with a total power rating of 250W.

All of the announced GeForce 50 series GPUs get updated connectivity: PCIe 5.0 x16, and DisplayPort 2.1b with UHBR20, which introduces support for active cables. They also feature HDMI 2.1 and a single 16-pin power connector.

GPU performance and pricing

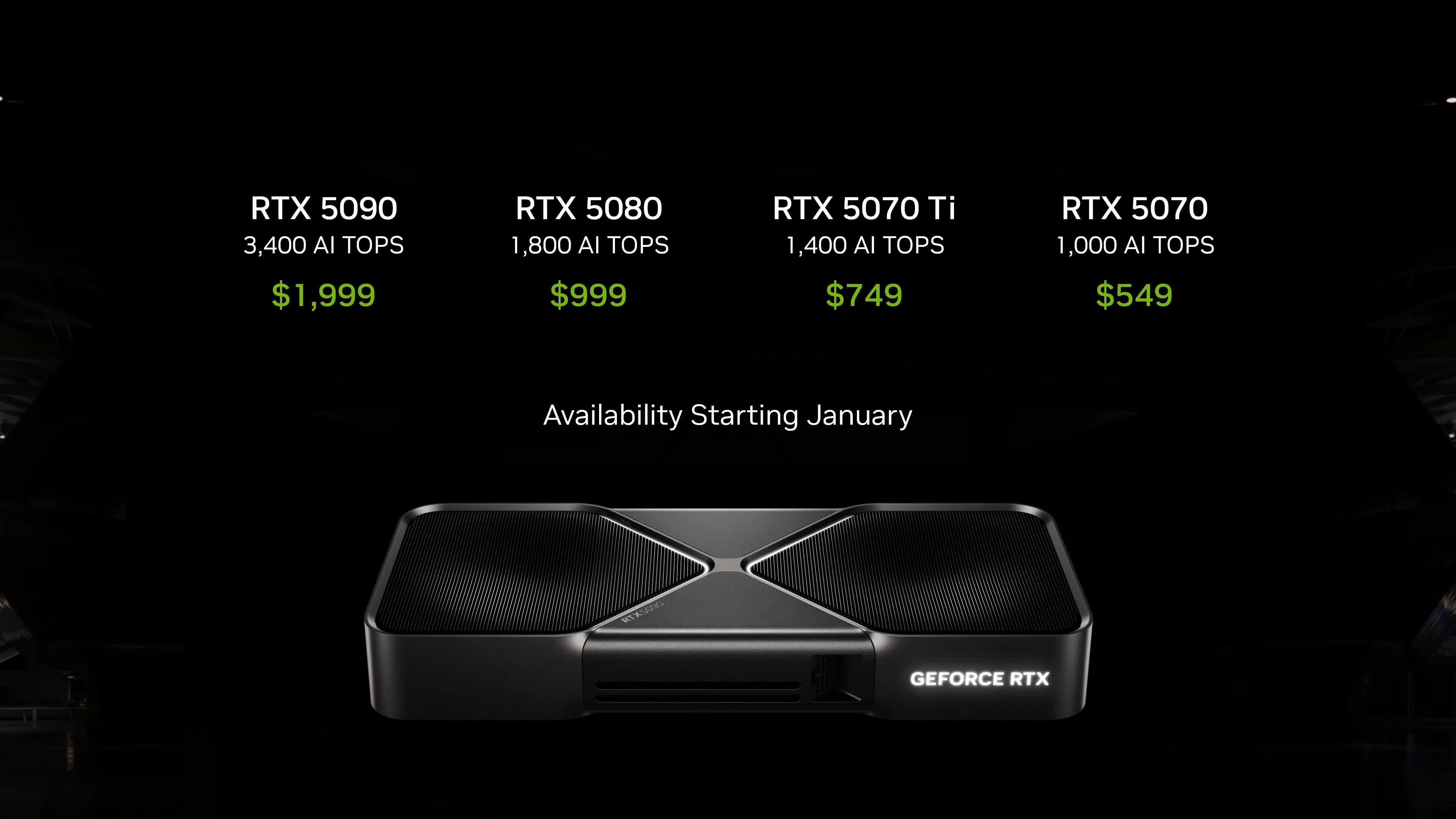

Now let’s dive into the most important aspects of Blackwell. There’s both good and bad here. The GeForce RTX 5090 is outrageously expensive, as expected, priced at $2,000. Meanwhile, the RTX 5080, with half the GPU hardware, is priced at $1,000 – less extreme than many rumors suggested, though still quite expensive.

The surprises for us were the RTX 5070 Ti at $750 and the RTX 5070 at $550 – both $50 lower than their counterparts from the previous generation at launch.

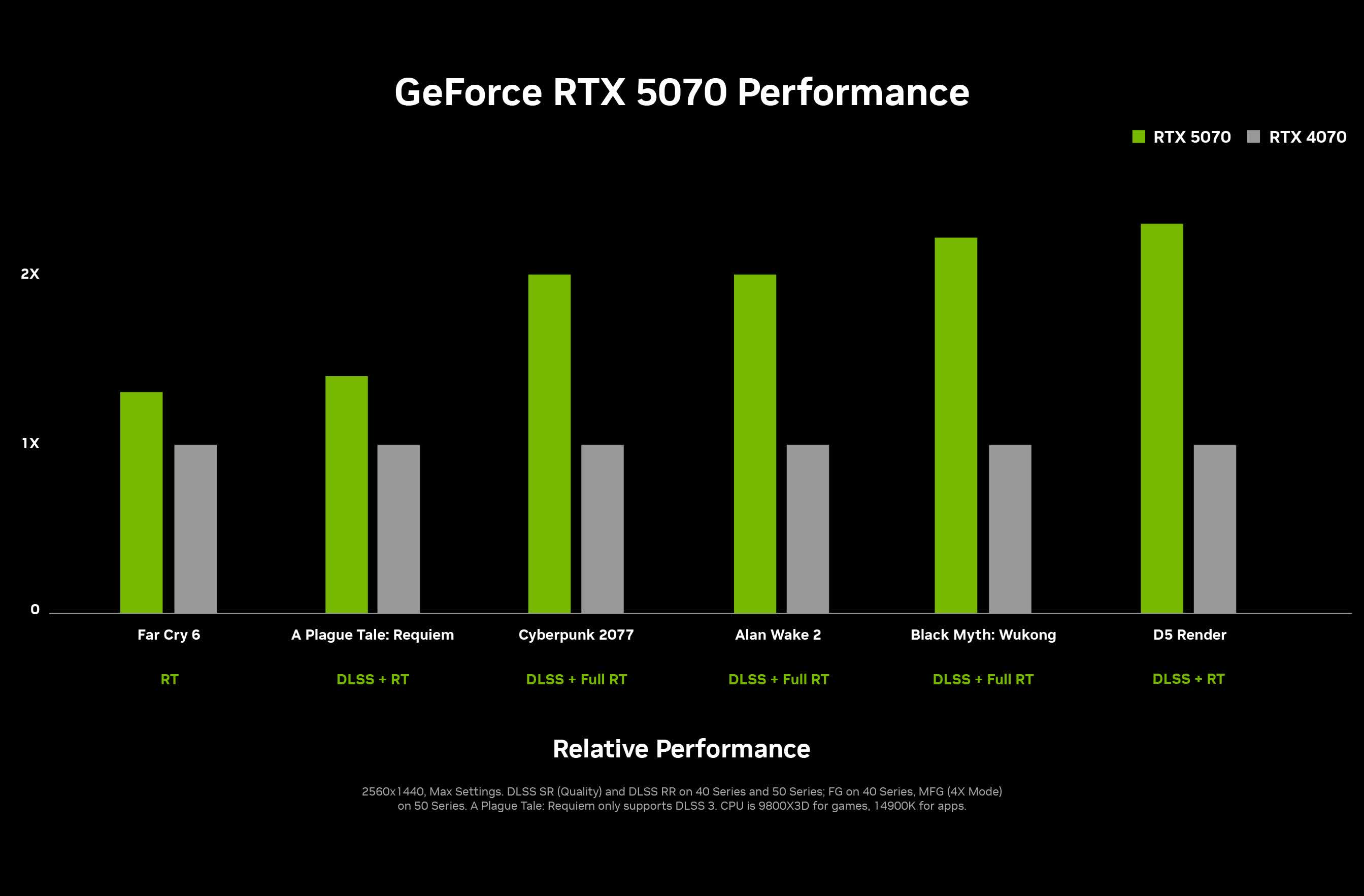

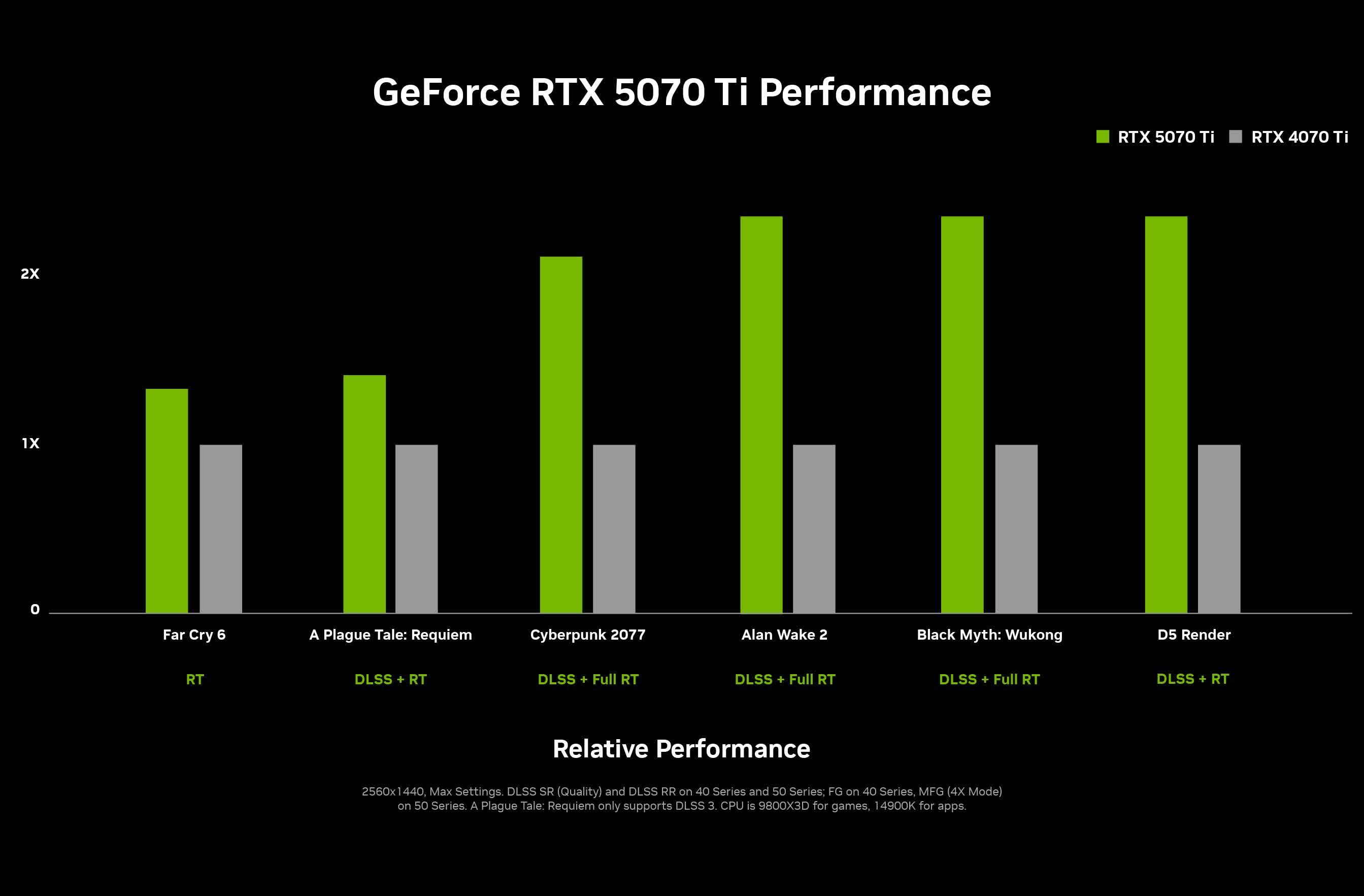

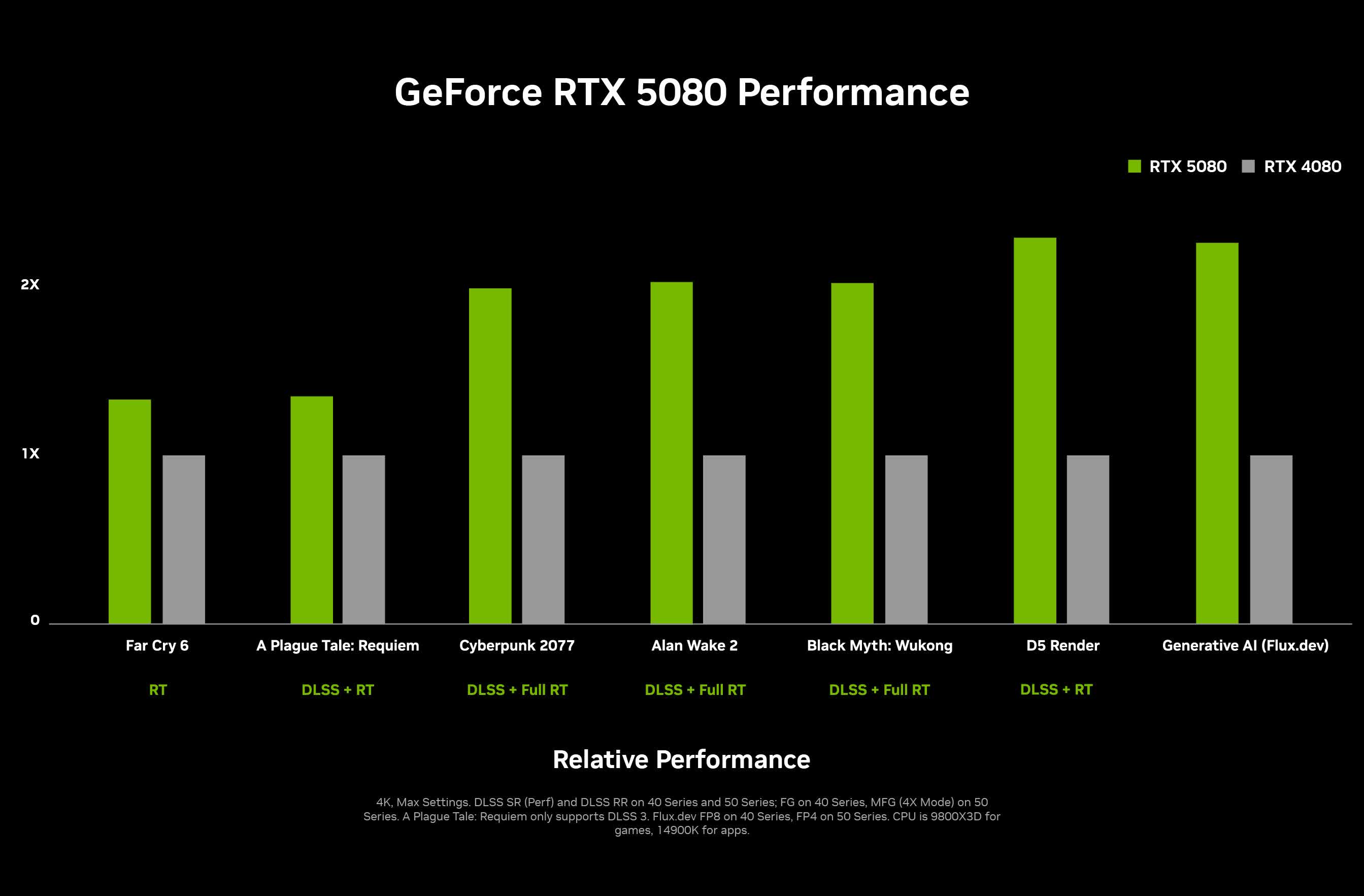

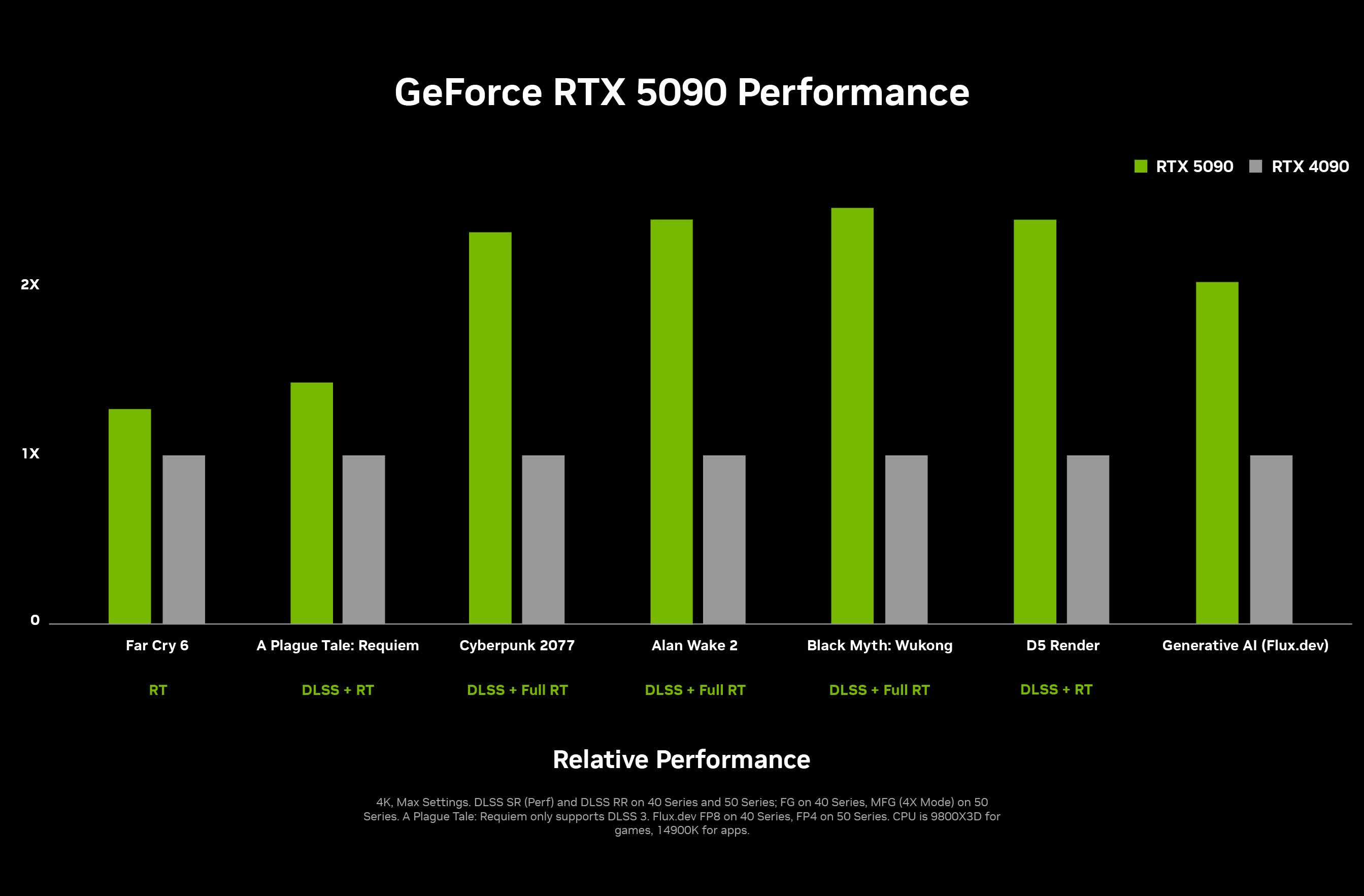

As for the performance claims… Nvidia has, as usual, used its marketing to obscure actual gaming performance. RTX 50 GPUs support DLSS 4 multi-frame generation, which previous-generation GPUs lack. This means RTX 50 series GPUs can output double the frames of previous-gen models in games that support it, making them appear up to twice as “fast” as RTX 40 series GPUs. But in reality, while FPS numbers will increase with DLSS 4, latency and gameplay feel may not improve nearly as much.

In its charts, Nvidia highlighted four games and apps supporting DLSS 4: Cyberpunk 2077, Alan Wake 2, Black Myth: Wukong, and D5 Render. We’ll set those aside for now since we lack proper performance data for those titles; the performance claims for the other games matter more.

The claim that the RTX 5070 matches the RTX 4090 in performance seems dubious. Perhaps it could match the 4090’s frame rate with DLSS 4 multi-frame generation enabled, but certainly not in raw, non-DLSS performance. Based on Nvidia’s charts, the RTX 5070 seems 20-30% faster than the RTX 4070 at 1440p.

This would place the RTX 5070 slightly ahead of the RTX 4070 Super for about $50 less, or, put another way, 20-30% faster than the RTX 4070 for the same price. Depending on how it performs relative to the RTX 4070 Super, this could offer reasonable, though slightly underwhelming, value. A 10% performance improvement over the 4070 Super combined with an 8% price cut ($600 down to $550) would result in roughly a 20% improvement in cost per frame.

Nvidia made similar claims for the RTX 5070 Ti, estimating a 20-30% performance improvement over the 4070 Ti at 1440p (ignoring the misleading DLSS 4 numbers). Combined with the $50 lower price, this would result in an even better cost-per-frame improvement over the RTX 4070 Ti, likely exceeding 30%, though that shrinks closer to 20% relative to the faster RTX 4070 Ti Super.

The RTX 5080 tells a similar story: a 20-30% performance improvement over the RTX 4080. Given that the RTX 4080 Super was only about 5% faster than the RTX 4080 and launched at the same $1,000 price as the RTX 5080, this works out to a roughly 20% improvement in cost per frame.
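
The value math in these paragraphs boils down to one ratio: relative performance times the old price, divided by the new price. Here is a minimal sketch, assuming the midpoint of Nvidia’s 20-30% claims (25%), the hypothetical 10% lead over the RTX 4070 Super floated above, and launch prices rounded to the nearest $50 as we do in the text. These are illustrative inputs, not measured results.

```python
def value_gain(perf_ratio: float, old_price: float, new_price: float) -> float:
    """Change in frames per dollar when moving from the old card to the new one.

    perf_ratio is new-card performance divided by old-card performance.
    """
    return perf_ratio * old_price / new_price - 1


# Assumed inputs: 1.25 is the midpoint of Nvidia's 20-30% claims; 1.10 is the
# hypothetical 10% lead over the RTX 4070 Super discussed above.
comparisons = {
    "RTX 5070 vs 4070 Super": value_gain(1.10, 600, 550),
    "RTX 5070 Ti vs 4070 Ti": value_gain(1.25, 800, 750),
    # The 4080 Super is ~5% faster than the 4080 and launched at the same $1,000
    "RTX 5080 vs 4080 Super": value_gain(1.25 / 1.05, 1000, 1000),
}

for name, gain in comparisons.items():
    print(f"{name}: {gain:+.0%} frames per dollar")
# RTX 5070 vs 4070 Super: +20% frames per dollar
# RTX 5070 Ti vs 4070 Ti: +33% frames per dollar
# RTX 5080 vs 4080 Super: +19% frames per dollar
```

Note that a 20% gain in frames per dollar is the same as cost per frame falling by about 17% (1 - 1/1.2), so the two ways of expressing value produce slightly different-looking numbers for the same card.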

As for the RTX 5090, the non-DLSS 4 numbers indicate a 20-40% performance gain over the RTX 4090. However, since the RTX 5090 is priced 25% higher ($2,000 versus $1,600), most games would need to see closer to a 50% gain to yield a 20% improvement in cost per frame.
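
Flipping that math around shows why the RTX 5090’s price increase sets such a high bar. This sketch plugs in the 25% price increase and the two ends of the 20-40% range from the paragraph above; individual games will of course land at different points.

```python
def value_change(perf_gain: float, price_increase: float) -> float:
    """Change in frames per dollar given fractional performance and price increases."""
    return (1 + perf_gain) / (1 + price_increase) - 1


def required_perf_gain(target_value_gain: float, price_increase: float) -> float:
    """Performance uplift needed to improve frames per dollar by target_value_gain."""
    return (1 + target_value_gain) * (1 + price_increase) - 1


price_increase = 0.25  # RTX 4090 at $1,600 -> RTX 5090 at $2,000

print(f"{value_change(0.20, price_increase):+.0%}")       # -4%
print(f"{value_change(0.40, price_increase):+.0%}")       # +12%
print(f"{required_perf_gain(0.20, price_increase):.0%}")  # 50%
```

In other words, a 20% uplift would leave cost per frame slightly worse than the RTX 4090’s, and even a 40% uplift only yields about a 12% value improvement.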

Nvidia’s testing was conducted at 4K using DLSS Performance mode, which renders internally at 1080p. That could lead to CPU bottlenecks and leave the massive RTX 5090 underutilized. We suspect this could explain the stronger gains in path-traced DLSS 4 games, which remain GPU-bound even at low render resolutions, compared to traditional titles. Of course, we’ll have to wait for reviews to see how that plays out.
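
DLSS Performance mode renders at half the output resolution on each axis, so the GPU only shades a quarter of the output pixels. A quick sketch of that arithmetic; the 0.5 per-axis scale for Performance mode is the only mode-specific input assumed here.

```python
def render_resolution(out_w: int, out_h: int, axis_scale: float) -> tuple[int, int]:
    """Internal render resolution before upscaling, for a per-axis scale factor."""
    return round(out_w * axis_scale), round(out_h * axis_scale)


out_w, out_h = 3840, 2160                    # 4K output
w, h = render_resolution(out_w, out_h, 0.5)  # DLSS Performance mode
print(w, h)                                              # 1920 1080
print(f"{(w * h) / (out_w * out_h):.0%} of output pixels")  # 25% of output pixels
```

With only a quarter of the pixels to shade, each frame finishes sooner on the GPU, which makes it more likely that the CPU becomes the limit in lighter, non-path-traced games.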

Our initial gut reaction is that Nvidia seems to be delivering around a 20% cost-per-frame improvement, and therefore a 20% value increase, across the board compared to the RTX 40 Super GPUs. That is certainly better than the RTX 40 series, which in many situations offered absolutely nothing for gamers in terms of value. Hopefully this apparent 20% value increase turns out to be more like 30% in reality, in which case this looks like a good generation. If the cards land closer to 20% or, worse, lower, it will be a disappointment. But so far, there is enough to get at least a little excited about.

A growing gap, plus other architectural improvements

One of our concerns with this generation is the absolute chasm Nvidia has created between the highest-tier model and the next model down. It’s pretty absurd that the RTX 5090 is basically double the hardware of the RTX 5080. It’s almost like we’re back in the days of dual GPUs, except this time it’s a massive single GPU die. This makes the RTX 5080 feel like quite a weak product in comparison, even though there is a substantial price difference to account for that.

Gone are the days of the 80 class being just a small reduction in capabilities relative to the best model. That gap is only growing, ensuring that gamers are forced to pay a ludicrous amount of money to access performance that’s materially better than the best of the 40 series.

This has flow-on effects for the rest of the product stack, which also becomes comparatively weak relative to the best GPU. However, there’s at least some good news here. In the previous generation, the RTX 4080 had about 27% more CUDA cores than the RTX 4070 Ti; in this generation, the RTX 5080 has 20% more CUDA cores than the RTX 5070 Ti, along with much closer memory specifications. So the 5070 Ti isn’t cut down as much relative to the 5080 as the 4070 Ti was relative to the 4080 at the RTX 40 series launch.
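
For reference, here are the raw CUDA core ratios behind these comparisons. The core counts are Nvidia’s published specs for each card (21,760 for the RTX 5090, 10,752 for the RTX 5080, 8,960 for the RTX 5070 Ti, 9,728 for the RTX 4080, and 7,680 for the RTX 4070 Ti) rather than figures stated in the text.

```python
# CUDA core counts from Nvidia's spec sheets
CUDA_CORES = {
    "RTX 5090": 21760,
    "RTX 5080": 10752,
    "RTX 5070 Ti": 8960,
    "RTX 4080": 9728,
    "RTX 4070 Ti": 7680,
}


def core_ratio(bigger: str, smaller: str) -> float:
    """How many times more CUDA cores the first card has than the second."""
    return CUDA_CORES[bigger] / CUDA_CORES[smaller]


pairs = [
    ("RTX 5090", "RTX 5080"),     # flagship vs the next model down
    ("RTX 4080", "RTX 4070 Ti"),  # last generation's 80 vs 70 Ti gap
    ("RTX 5080", "RTX 5070 Ti"),  # this generation's 80 vs 70 Ti gap
]
for bigger, smaller in pairs:
    print(f"{bigger} / {smaller}: {core_ratio(bigger, smaller):.2f}x")
# RTX 5090 / RTX 5080: 2.02x
# RTX 4080 / RTX 4070 Ti: 1.27x
# RTX 5080 / RTX 5070 Ti: 1.20x
```

The 5090 has just over twice the cores of the 5080, while the 80-to-70 Ti gap narrows from about 1.27x to exactly 1.20x.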

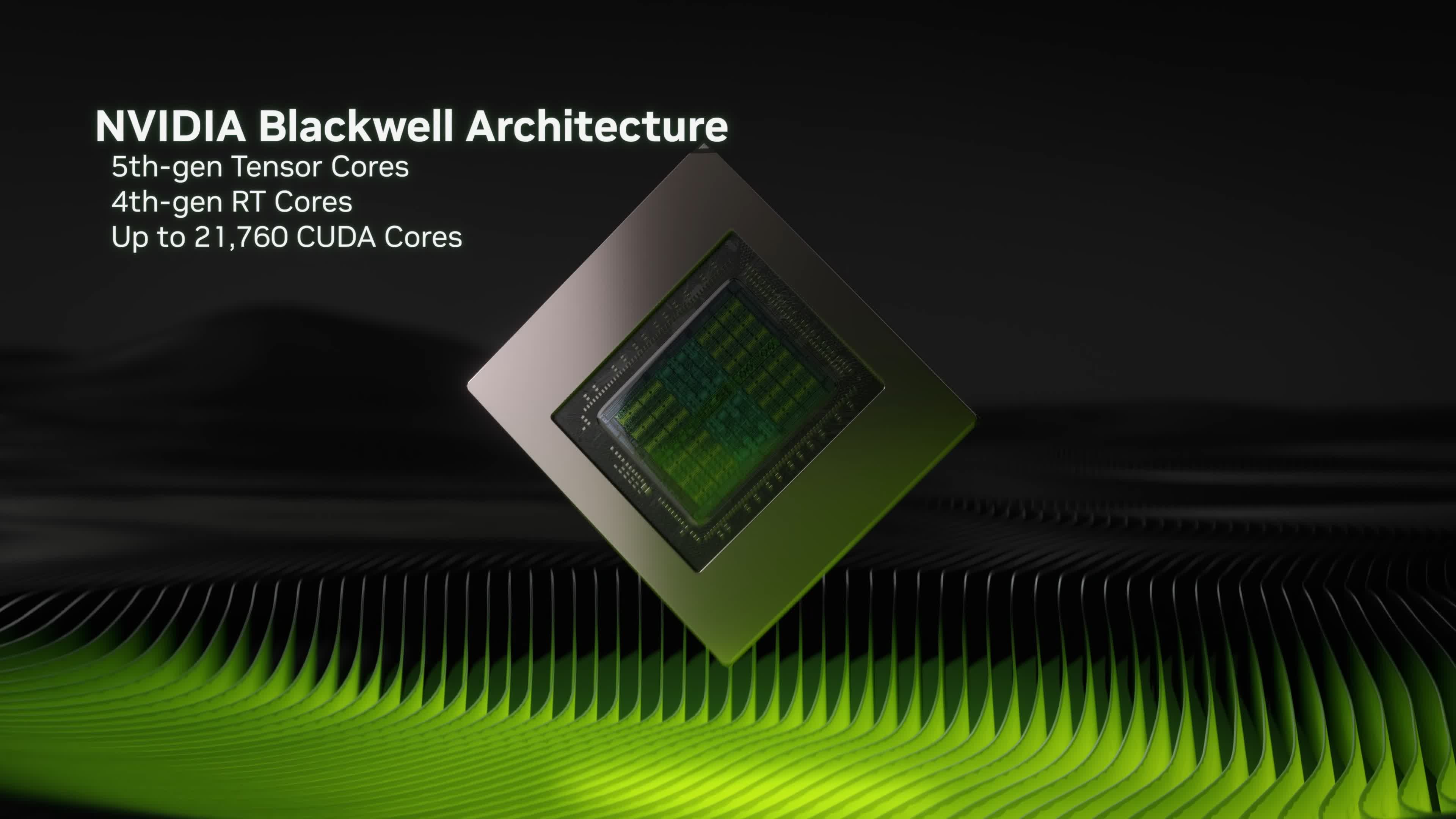

Nvidia’s Blackwell architecture is an iteration on previous architectures, improving existing components like the ray tracing units and Tensor cores. Jensen Huang dedicated a ton of keynote time to AI alone (we won’t bore you with that), but basically, Blackwell is powerful for AI in addition to regular processing. Shocker, right? A new Nvidia GPU is being advertised as the best for AI yet.

The architecture integrates the Tensor cores more tightly within the SM and adds improved shader execution reordering (which Nvidia says is twice as efficient), RT cores with twice the ray-triangle intersection rate, and enhanced compression to reduce memory footprint. Nvidia claims this will allow for more geometry in ray-traced scenes.

Blackwell also improves video encoding and decoding with a new generation of the hardware engine. This is the first time we are seeing a video decoding engine improvement since the RTX 20 to 30 series upgrade. The new display engine also allows for new multi-monitor configurations: up to four 4K 165Hz monitors without DSC, or two 4K 360Hz monitors with DSC. Two 8K 100Hz monitors with DSC are also possible.

Nvidia is releasing Founders Editions for all models except the RTX 5070 Ti. The RTX 5090 FE looks similar to the RTX 4090 FE, except now both fans are on the same side. Amazingly, Nvidia is using just a two-slot design for the 5090 despite the card being rated for 575W. The RTX 5080 appears to use a very similar Founders Edition design, while the RTX 5070 uses a scaled-down model that is shorter: 242mm long instead of 304mm, according to Nvidia’s specs.

DLSS 4 next-gen upscaling tech

Nvidia also announced DLSS 4, the next generation of its upscaling technology. The headline feature is multi-frame generation, which generates three frames for every real rendered frame instead of one.

There are significant question marks here over latency and image quality. When frame generation creates every second frame, half of the frames you see are real. When it generates three frames per rendered frame, only a quarter of them are. So those generated frames had better be of excellent quality; unless generated-frame quality is significantly overhauled relative to DLSS 3, you will see a lot of artifacts much of the time.
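
The share of displayed frames that are actually rendered follows directly from how many frames are generated per rendered frame:

```python
def rendered_share(generated_per_rendered: int) -> float:
    """Fraction of displayed frames that the game actually rendered."""
    return 1 / (1 + generated_per_rendered)


print(f"DLSS 3 frame generation: {rendered_share(1):.0%} real")        # 50% real
print(f"DLSS 4 multi-frame generation: {rendered_share(3):.0%} real")  # 25% real
```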

The other issue is using multi-frame generation as a hack to reach high frame rates. Nvidia showed a demo where a game ran at 30 FPS without DLSS 4 and at over 240 FPS with DLSS 4 enabled. Of course, DLSS upscaling wasn’t even enabled in the 30 FPS example, but we really don’t want to see any situation where generating three frames is used to get a 30 FPS game up to 120 FPS. That would be absolutely horrific in terms of input latency.
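
The latency concern comes from the fact that your input only shows up in rendered frames. This is a simplified sketch that ignores the extra buffering interpolation adds (it has to wait for the next rendered frame before it can display the in-between ones), so real latency would be somewhat worse than these floors.

```python
def rendered_fps(output_fps: float, generated_per_rendered: int) -> float:
    """Frame rate the game actually renders for a given displayed frame rate."""
    return output_fps / (1 + generated_per_rendered)


for output_fps in (120, 240):
    base_fps = rendered_fps(output_fps, 3)  # 3 generated frames per rendered frame
    frame_time_ms = 1000 / base_fps
    print(f"{output_fps} FPS shown -> {base_fps:.0f} FPS rendered, "
          f"{frame_time_ms:.1f} ms per rendered frame")
# 120 FPS shown -> 30 FPS rendered, 33.3 ms per rendered frame
# 240 FPS shown -> 60 FPS rendered, 16.7 ms per rendered frame
```

So a “120 FPS” game using multi-frame generation responds to input no better than a 30 FPS one, even though its motion looks like 120 FPS.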

Only the multi-frame generation component of DLSS 4 is exclusive to Blackwell GPUs. DLSS 4 also brings enhancements to regular frame generation, super resolution, and ray reconstruction, and those features keep the same GPU support they have today.

The restriction on multi-frame generation is due to what Nvidia calls “enhanced hardware flip metering capabilities” in the Blackwell architecture. Nvidia says this is required for a smooth experience, which we hope is true; otherwise, RTX 40 series owners are getting screwed.

According to Nvidia, the quality improvements to upscaling and other parts of DLSS 4 are significant, thanks to a switch to transformer-based models. Nvidia claims this will “improve image quality with improved temporal stability, less ghosting, and higher detail in motion.”

That sounds pretty exciting to us, and honestly, we’re more interested in the upscaling quality improvements in DLSS 4 than in multi-frame generation. Nvidia also says the new frame generation model is 40% faster and uses 30% less VRAM. To generate multiple frames, the AI model only needs to run once per rendered frame. The hardware optical flow step is replaced with an efficient AI model, which raises the question of why frame generation is still limited to the RTX 40 series and newer, given it was originally hardware-restricted because of the optical flow accelerators in Ada Lovelace.

What’s not clear is whether the new transformer model for DLSS has a performance cost, and whether that cost is the same on all GPUs. For example, Nvidia claims frame generation is now faster, but does that hold on both RTX 50 and RTX 40 GPUs? Is there a performance hit from using the transformer model for upscaling? These questions will have to be answered in testing.

Nvidia’s gaming software moat

The fact that 75 games and apps are coming at launch with DLSS 4 support is quite impressive, showing Nvidia has learned that widespread game support is needed to make features worthwhile.

We don’t know whether this means significant game updates at launch for all these titles or whether Nvidia has found some way to add DLSS 4 to these games automatically. But after a quick look through the list, it isn’t every DLSS 3 game, and some games are only adding DLSS 4 support later. That points to per-game integration work and a widespread effort to implement the feature, rather than an automatic switch.

In addition, Nvidia is building an override feature into the Nvidia app that acts like a DLL swap, allowing games to be upgraded (or “overridden,” as Nvidia calls it) to the latest DLSS models for frame generation, super resolution, and ray reconstruction. For RTX 50 series owners, it will also turn frame generation into multi-frame generation.

Nvidia has announced Reflex 2, which essentially brings frame warp from VR gaming to regular PC gaming for the first time. The idea here is that instead of showing the rendered frame based on old input, Reflex 2 will take a more recent input sample, perform calculations on the CPU, and warp/shift the rendered frame based on that input.

In a sense, this “fakes” a frame update and applies it to the rendered frame, reducing perceived latency for actions that shift the camera because real input is being taken into account to warp the frame by the correct amount. It will not reduce latency for game actions like firing your gun, for example, because that cannot be warped.
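
As a rough illustration of warping “by the correct amount,” here is a simplified pinhole-camera model for a pure horizontal camera turn. This is an assumption for illustration only, not Nvidia’s actual Reflex 2 algorithm: it only computes how far content at the center of the image moves, whereas a real warp also has to deal with depth, vertical motion, and the screen edges. The function name and example values are hypothetical.

```python
import math


def warp_shift_px(delta_yaw_deg: float, horizontal_fov_deg: float, width_px: int) -> float:
    """Approximate horizontal shift (in pixels) of content at the image center
    when the camera turns by delta_yaw_deg, for a pinhole camera."""
    # Focal length expressed in pixels, derived from the horizontal field of view
    focal_px = (width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)
    # A point that was on the view axis now sits delta_yaw degrees away from it
    return focal_px * math.tan(math.radians(delta_yaw_deg))


# Hypothetical example: a 1-degree turn between render and display,
# 90-degree horizontal FOV, 1920-pixel-wide frame
print(f"{warp_shift_px(1.0, 90.0, 1920):.1f} px")  # 16.8 px
```

Shifting the image by roughly that amount exposes a sliver at the edge of the frame with no rendered data, which is the kind of gap Nvidia says it fills with inpainting.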

It’s a cool concept, but it remains to be seen how it actually works in games. Will it feel nice to play a game with Reflex 2 enabled, or will it feel a bit floaty and weird if the warp calculations aren’t accurate? It’s hard to say at this point, although it’s good to see Nvidia claiming gains for both CPU- and GPU-limited scenarios.

There’s also the question of image quality. Nvidia says it uses inpainting to fill gaps in the warped render, but every single frame is affected by this warp, so if the warp quality is poor, the entire game will look worse all the time.

Reflex 2 is coming first to The Finals and Valorant, initially supporting RTX 50 series GPUs with other RTX GPUs to follow. So it doesn’t sound like this will be restricted to new Blackwell models. However, it’s important to note that frame warp in Reflex 2 will not solve the latency issues of DLSS frame generation, especially multi-frame generation, because there is no indication it will be integrated into games that support DLSS 4 single- or multi-frame generation. For all we know, the two might not even work together.

Blackwell also supports RTX Neural Shaders and RTX Neural Faces, though we’re not sure how soon we’ll actually see them in games, so they seem more like forward-looking features for now.

Nvidia claims that RTX Neural Shaders can be used to compress textures by up to 7x, saving memory, which is perhaps why it’s still being stingy with VRAM this generation. But of course, this feature is absolutely useless in games that don’t use it, so it isn’t an actual excuse for the low VRAM amounts, especially on the RTX 5070.

That’s everything Nvidia announced at CES 2025 for PC gamers. The new line-up of GeForce RTX 50 series graphics cards looks decent at first glance, but it certainly didn’t blow us away. Performance gains look reasonable once you strip out all the BS marketing about new features, the value increase looks modest to okay, and the hardware itself is impressive.

The exception to this would be the RTX 5070, which has just 12GB of VRAM at $550 – too low for that class of graphics card in 2025, even though Nvidia is claiming reduced memory usage through new features. Some games already show that 12GB is insufficient even without DLSS applied, and since most of the VRAM savings come through DLSS 4 model updates, if the base game still doesn’t work with 12GB of VRAM, it doesn’t matter if frame generation is a little more memory efficient.

It’s always positive to see that pricing isn’t ridiculous or outrageous. This doesn’t appear to be a repeat of the RTX 40 series, where gamers were treated to little to no increase in value and shockingly high prices for some models. Of course, we still need to see healthy performance gains from those models to get the required value increase.

DLSS 4 sounds good on paper, and we’re looking forward to testing Nvidia’s claims about improved upscaling quality. The ability to upgrade the DLSS model in existing games through the Nvidia app is also neat, as many gamers were already performing manual DLL swaps.

As for multi-frame generation, we’re not sold on that feature yet given the implications for image quality and latency… especially if it’s used as a crutch to allow for native rendering at even lower frame rates. And we absolutely hate how we’re now in a situation where Nvidia can just claim ridiculous performance gains by generating more and more frames. Rest assured we’ll investigate this further when we have the GPUs on hand and get to testing.